Amid the pandemic, the police violence, and the riots, President Trump’s executive order (EO) against social media companies may have gone unnoticed. On May 28, Trump signed an order to limit social media platforms’ editorial power after Twitter tagged some of his tweets as “potentially misleading.” Although the order will likely be challenged in court, the debate over the role of tech companies in the public arena is more alive than ever.

While social media platforms are not liable for the content published on them, they are also expected to protect public discourse from fake news and harmful content. But not all choose to do so. With Twitter tagging Trump’s tweets and Facebook declining to do the same, the question remains: what should social media platforms do?

In 1996, the Communications Decency Act (CDA) was passed in an effort to regulate pornographic content online. Specifically, section 230 of the CDA protected Internet services from being treated as publishers. “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider,” reads section 230.

Social media platforms were no longer liable for the content published on them by third parties, as they were only acting as hosts without an editorial voice. This piece of legislation allowed social media apps to flourish because they no longer feared constant legal challenges over that content. Still, the protection of section 230 has its limits. For example, platform providers must remove criminal material from the web as well as content that violates sex trafficking laws.

But in the past four years, section 230 has come under scrutiny at a time when social media platforms are reaching adulthood. Fake news has flooded social media sites and affected a changing political landscape: Trump’s rise in the US and Brexit in the UK are prime examples of the effect of distorted news. Social media platforms started designing ways to limit the amount of fake content online while forbidding hate speech. Last year, Facebook and Instagram finally banned leading far-right extremists from their apps, such as Infowars’ founder Alex Jones (read “Facebook’s ban on extremists is not enough to stop fake news, we need more”). However, these efforts were often inconsistent and left many wondering whether social media platforms should be subject to the same legislation as other publishers.

In the past month, tech companies have struggled again to categorize President Trump’s recent posts. The debate arises from the definition of what is harmful and dangerous to society. What some people think should not be published, others find innocuous or perhaps funny. The author’s influence also matters. A misleading statement or plain falsehood has a very different impact when it comes from an unknown individual than when it comes from a presumably respected politician.

As an example of how difficult these judgments are, consider President Trump’s statement “when the looting starts, the shooting starts,” a comment originally made by Miami’s police chief in response to the 1967 protests, which fueled state violence against mainly black demonstrators. On the one hand, Facebook decided to back off from taking action against Trump’s online comments. Mark Zuckerberg’s platform left the post untouched, arguing that it did not violate its policies and did not directly incite violence. In contrast, Twitter immediately tagged the tweet with a notice saying that the post glorified violence. Although the content can still be viewed, Twitter warns readers of what they are about to read.

The Facebook-Twitter debate is at the heart of section 230 of the CDA. Both tech giants sought to respect the right to express opinions on the web, and they would rather not editorialize. However, freedom of speech has its limits, the first being incitement to actions that could harm others. When a nation’s president promotes state violence by arguing that the shooting is going to start, the impact could be harmful. Does the comment inspire “actions that could harm others” not only from state actors but, most importantly, from voters, followers, and citizens who believe violence could be justified if there is looting? Twitter thinks so; Facebook does not. Consider Trump’s claim recommending disinfectant as a way to kill Covid-19, a statement that had to be refuted by all news outlets in the country and by chlorine manufacturers. Had it been made through social media instead of live television, both platforms would probably have agreed that it could harm hundreds of citizens who believed the president.

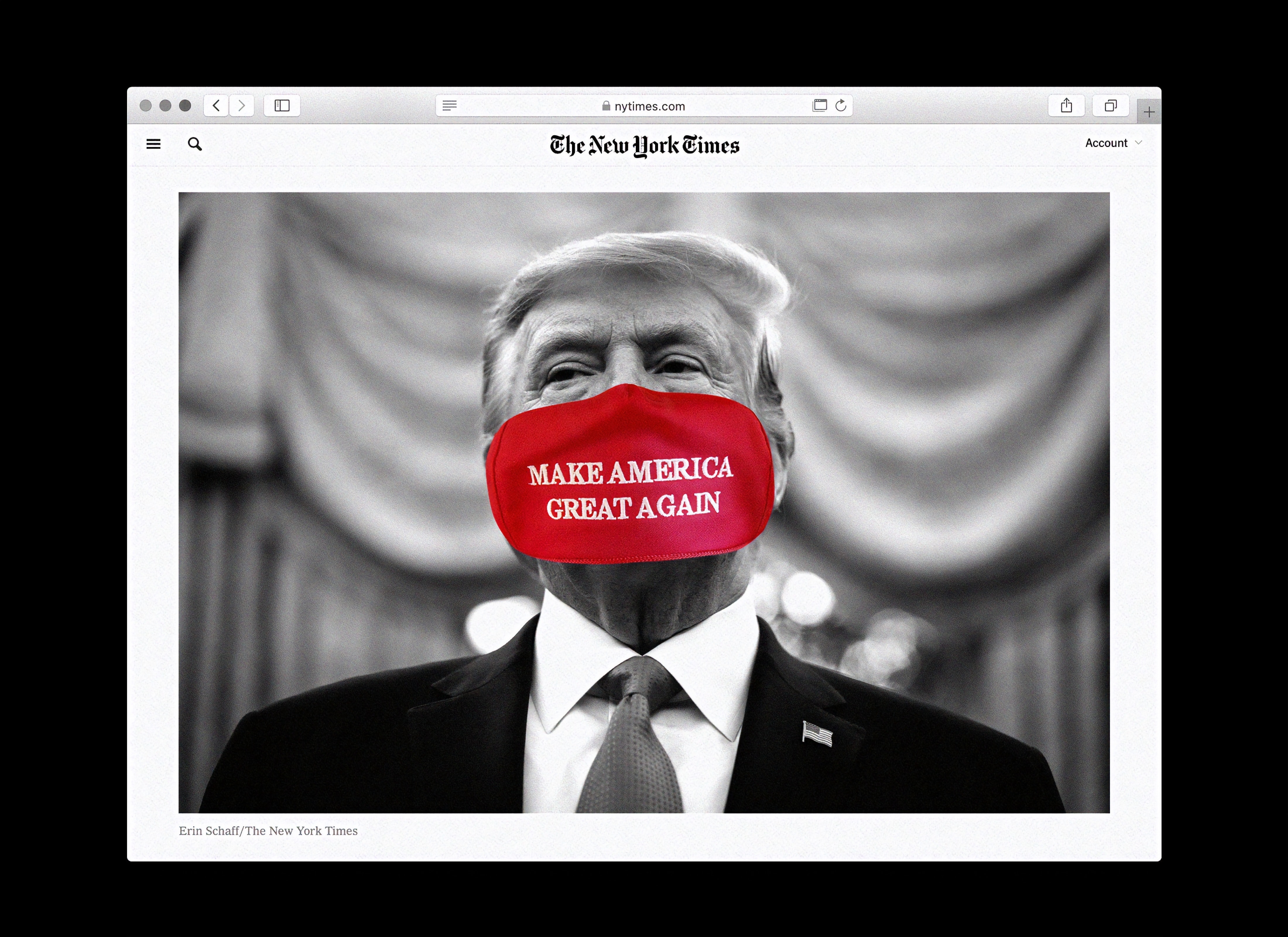

Twitter’s move to label the president’s tweets as dangerous angered Trump, who resorted to the EO to retaliate. The order seeks to limit section 230 of the CDA and to stress that social media platforms must be neutral. “A small handful of social media monopolies controls a vast portion of all public and private communications in the United States,” said Trump. He added: “They’ve had unchecked power to censor, restrict, edit, shape, hide, alter, virtually any form of communication between private citizens and large public audiences.”

Trump’s EO comes after far-right sectors claimed that social media platforms have a liberal bias and censor their freedom of speech. That assertion is based on the idea that fake news, racial slurs, threats, or violent outcries are just “opinions” and should be respected. But they are not only “opinions.” In our view and that of many others, they violate the limits of freedom of speech and endanger people’s lives. As such, they should be banned from social media platforms.

Legal experts say that the executive order will be challenged because its legal basis is doubtful. However, the debate over what freedom of speech is and what role social media should play in protecting its users remains. At a minimum, these tech giants should ensure that the President’s comments or statements do not harm users. At the same time, they should also be expected to ban fake news from their platforms, since misinformation harms citizens’ wellbeing even when they agree with the content politically. Trump’s comments fall in that arena, and, as such, Twitter made the right move in tagging them as misleading. Freedom of speech is not absolute, neither online nor in real life.

I agree more with Twitter. Mr. President is inciting hate speech and abusing the power authorized by the people.

While it is true that digital platforms carry a lot of news from sources of dubious origin, I believe that cancellation is not the best way to combat it. The best way to combat these misleading sources is to spread sources that can truly be trusted.

I agree more with Twitter, Mr President incites hate speech and violates people’s approved power.

Very good article. Thank you for sharing it.

Great and useful information.

The most ongoing topic of the days, best article so far, so beautifully written

Useful and Informative.

I agree more with Twitter,

Thanks for Sharing

Great and useful information. Thank you

I think it is just another political move for the next election

I think it’s believable due to our antiquated electoral college system that Trump could again be elected by a minority of voters.

Thank you for all the content offered by the page through your comments and information, it was a great help for some work